AssistDeploy 1.2 Is Now Live

Evening!

AssistDeploy, our attempt to fully automate SSIS deployments, is not yet a week old, yet we're already on release 1.2, which is our fourth release. If you're wondering how the fourth release can be numbered 1.2, I suggest you read up on SemVer.

Unlike the previous post, this one will be brief. But, like that post, I'm going to explain why I've made the changes before explaining what they are, so that the context is understood.

What most IT projects attempt to achieve is:

- take some knowledge of a subject matter expert

- turn that into a computer program

- profit

Case in point: a lecturer at university told me that his first-ever project manager role was to guide a team of developers in capturing the knowledge of a group of retired pilots, whose job was to determine the maximum height of buildings around an airport, and turning it into software, so that the pilots could go back to being retired and let the software do the job for them.

A slightly more trivial task would be taking something like… oh, I don't know… an ispac, a json file and the knowledge of how to deploy an SSIS project, and automating that using PowerShell. But there is a problem here: by creating an artefact like the json file, I have inadvertently become a silo of knowledge on how this json file should look, and on the kind of error messages an incorrectly formatted json file produces. Even if the json is valid, the values of the keys could be wrong (e.g. booleans instead of strings). And most of these errors manifest themselves as failed stored procedure executions, which are far less useful than a straight-up "this is what the problem is" error message.

With that in mind, in 1.2 I have made two significant changes, both centred on the Import-Json function:

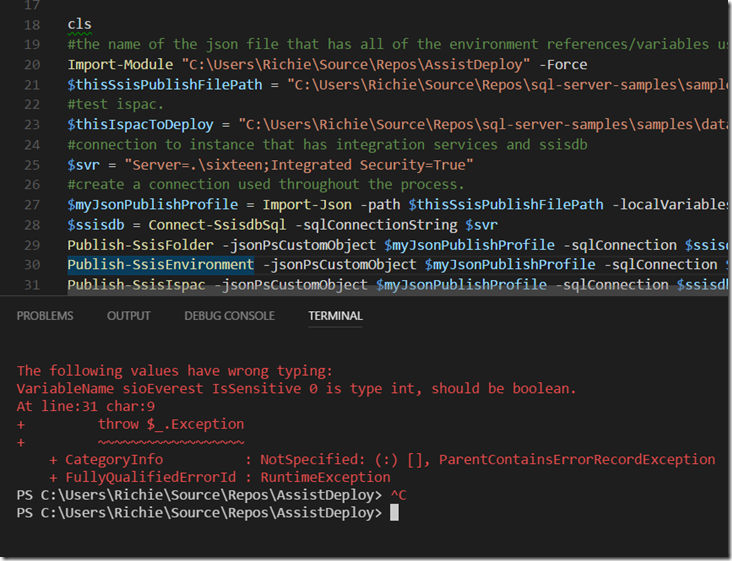

- Moved Import-Json out of each function – previously, each function took the file path of the json file and imported it into a custom PowerShell object. Whilst this made sense early on, I realised that it was wasteful in terms of time: the file only needs to be loaded into an object once, and that object can then be passed directly into each function that needs it. So whereas functions used to take the file like this, "-ssisPublishFilePath C:\blah.json", we now run Import-Json first and pass its output to each function, like this: "-jsonPsCustomObject $myJsonPublishProfile".

- Added validation of the json file to Import-Json – another benefit of importing the json file before we do anything else is that we can now validate it. There are two steps to the validation:

  - is the json file correct?

  - do PowerShell variables exist in the session to supply the value of each environment variable?

This second check is optional and is enabled with the -localVariables switch, just as it is when we publish SSIS variables.
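The two validation steps can be sketched roughly as follows. This is an illustration under assumptions, not AssistDeploy's implementation: Test-PublishProfileSketch is a hypothetical function, and the key names ssisFolderName, ssisProjectName, ssisEnvironmentVariable and variableName are guesses at the profile's shape (only isSensitive comes from this post). It relies on ConvertFrom-Json turning json true/false into [bool] and a bare 0 into an integer, so a simple type check is enough to reject a number where a boolean is expected.

```powershell
# Hypothetical validation sketch; NOT AssistDeploy's real Import-Json.
# Assumed profile keys: ssisFolderName, ssisProjectName, ssisEnvironmentVariable
# (an array of objects with variableName and isSensitive).
function Test-PublishProfileSketch {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][psobject]$jsonPsCustomObject,
        # When set, also require a PowerShell variable for each environment variable.
        [switch]$localVariables
    )
    $problems = @()

    # Step 1: does the parsed json have the keys we expect?
    $presentKeys = $jsonPsCustomObject.PSObject.Properties.Name
    foreach ($key in 'ssisFolderName', 'ssisProjectName', 'ssisEnvironmentVariable') {
        if ($presentKeys -notcontains $key) {
            $problems += "Missing required key '$key'."
        }
    }

    foreach ($var in @($jsonPsCustomObject.ssisEnvironmentVariable)) {
        if ($null -eq $var) { continue }
        if (-not $var.variableName) {
            $problems += 'An environment variable entry has no variableName.'
            continue
        }
        # ConvertFrom-Json maps json true/false to [bool] but 0 to an integer,
        # so this type check rejects "isSensitive": 0 with a readable message.
        if ($var.isSensitive -isnot [bool]) {
            $problems += "Variable '$($var.variableName)': isSensitive must be true or false, not '$($var.isSensitive)'."
        }
        # Step 2 (optional): is there a PowerShell variable with that name in scope?
        if ($localVariables -and -not (Get-Variable -Name $var.variableName -ErrorAction SilentlyContinue)) {
            $problems += "No PowerShell variable named '$($var.variableName)' was found."
        }
    }

    # Fail once, before anything touches SQL, listing every problem found.
    if ($problems.Count -gt 0) {
        throw ($problems -join [Environment]::NewLine)
    }
}

# Usage: a profile with isSensitive set to 0 fails with a specific message.
$json = '{ "ssisFolderName": "Sales", "ssisProjectName": "SalesEtl",
  "ssisEnvironmentVariable": [ { "variableName": "ConnStr", "isSensitive": 0 } ] }'
try {
    Test-PublishProfileSketch -jsonPsCustomObject ($json | ConvertFrom-Json)
} catch {
    Write-Host $_.Exception.Message
    # Variable 'ConnStr': isSensitive must be true or false, not '0'.
}
```

Collecting every problem before throwing, rather than stopping at the first, is a design choice in this sketch: one run tells whoever wrote the json everything that needs fixing.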

Below is a gist showing the old way and the new way:

So we now test that the json file is valid before deploying anything, and this is good: previously, a deployment could get halfway through before failing because of a dodgy json file or a missing PowerShell variable. Now we can check that the file is valid before we deploy, before we even connect to SQL, or even before a developer checks the json file in. The error messages are also far more pertinent. Here is what appears if I set isSensitive to 0 instead of false:

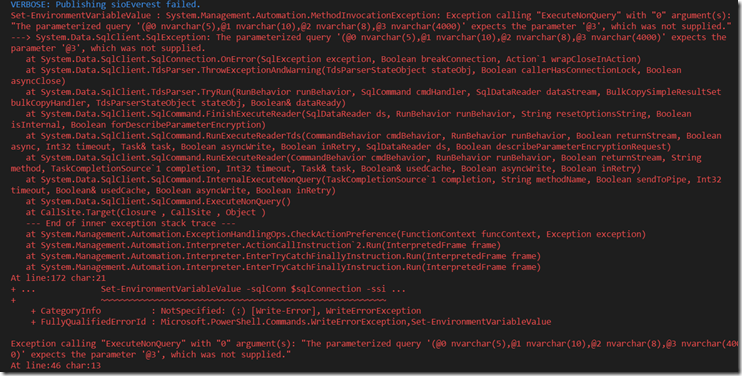

Compare that with what happens when I run the exact same json file through the module as it was at 1.0; I think you'll agree that the new way is much more helpful.

We're also importing the file only once, which again is good because it reduces the deploy time (not that importing a json file is a slow process!).
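A minimal sketch of the import-once pattern, for illustration only: Import-JsonSketch, Publish-FolderOldSketch and Publish-FolderNewSketch are hypothetical stand-ins rather than AssistDeploy's functions, and ssisFolderName is an assumed key. Only the parameter names -ssisPublishFilePath and -jsonPsCustomObject come from this post. The old-style function takes a path and re-parses the file on every call; the new-style one takes the object that was parsed once up front.

```powershell
# Hypothetical stand-ins illustrating "import once, pass the object around".
function Import-JsonSketch {
    param([Parameter(Mandatory)][string]$jsonPath)
    # Read the whole file as one string and parse it into a PSCustomObject.
    Get-Content -Path $jsonPath -Raw | ConvertFrom-Json
}

# Old style: every function receives a path and re-imports the file itself.
function Publish-FolderOldSketch {
    param([Parameter(Mandatory)][string]$ssisPublishFilePath)
    $publishProfile = Import-JsonSketch -jsonPath $ssisPublishFilePath
    "Would deploy to folder '$($publishProfile.ssisFolderName)'"
}

# New style: the caller imports once and passes the parsed object in.
function Publish-FolderNewSketch {
    param([Parameter(Mandatory)][psobject]$jsonPsCustomObject)
    "Would deploy to folder '$($jsonPsCustomObject.ssisFolderName)'"
}

# Usage: write a tiny profile to a temp file, then call both styles.
$path = Join-Path ([System.IO.Path]::GetTempPath()) 'publish.json'
'{ "ssisFolderName": "Sales" }' | Set-Content -Path $path

# Old: each call re-reads and re-parses the file.
Publish-FolderOldSketch -ssisPublishFilePath $path

# New: import once, then hand the same object to every function that needs it.
$myJsonPublishProfile = Import-JsonSketch -jsonPath $path
Publish-FolderNewSketch -jsonPsCustomObject $myJsonPublishProfile
```

Both calls emit the same string; the difference is that the new style reads and parses the file once, however many functions consume the resulting object.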

These changes came out of some feedback I received, and I'm certain they'll be useful.