Assist Deploy Is Available on GitHub

Hello!

For some time now I have been working on automating SSIS deployments, and earlier this week I published my efforts on GitHub. But before I get into the what/how, let’s focus on the why and let me catch you up on how I got here…

The task to take an ispac and deploy in and of itself is quite a straightforward process as there are multiple ways to do this. For those of you who want the abridged version of the linked post, the choices are as follows:

- Integration Services Deploy Wizard

- SSIS Catalog T-SQL API

- PowerShell

- Custom Utility

All of these have their respective advantages and disadvantages, and even so there’s no “one right way”.

However, there is more than just the deployment of an ispac: a SSIS Solution requires more than just an ispac to get going, including (but not limited to)

- folder

- environment

- environment variable

- environment reference

- setting parameter values

and that’s just the requirements off the top of my head. The good news is that in places like the Integration Services namespace and also the T-SQl API [1]. Tidy. The bad news is that when it comes to deployment artefacts, an ispac itself is only half the thing; the whole of the thing includes those things listed above that, crucially, do not have a deployment artefact anywhere. And so you have two options;

- Ignore it and manually configure everything else. Not necessarily the worst idea if you have a regimented process to do it and it’s fully documented, but most people probably don’t and its relied upon a few heroes to know how. And it’s definitely not the best approach.

- Suck it up and figure out how to automate it. There are samples of how to do it scattered across the internet, but they all fall short of showing how to deploy SSIS in a CI/CD process. Not that they are bad per se.

This problem of deploying folders et al via automation is one that I have seen solved in a variety of ways, with differing levels of success. And I take issue with all other samples I’ve seen online, for a few of reasons:

- Dependencies – exactly 0% of the IT world wants to manage the hassle of software dependencies, and yet exactly 100% of the IT world has to manage the hassle of dependencies. The sample I mentioned already uses the Integration Services namespace. Great. is the dll available on Nuget? Nope. Is it available via a Feature Pack? I will save you the drama of checking them all to tell you now, nope. Is it even available via downloading LocalDB? MSBuild Data Tools must do, surely? Nope, and nope. Essentially the dll is available through either SSMS download, or installing an instance of SQL Server. Neither one of these are appealing to me, because that’s a huge dependency outside of however I’m going to attempt to automate SSIS deployments. And neither of these are trivial to automate a download of.

- And I want to dwell on this point a bit further: when I ask people where are they going to run a deployment from, very few people consider that the box they are running a deployment from will have these things installed. I have worked at a few places where Octopus is used, and frequently there is resistance to have the tentacle software running on a production box, and with good reason: the account requires sysadmin privileges, Octopus Tentacle software may require regular patching, it takes resources away from the rest of the box…. and so the need to have a box that exists solely to run the Tentacle software is not an uncommon thing to see. And so this Tentacle may need all manner of software installed, which is where we’ll either end up in dll hell or constantly patching the box. Not great. Clearly there really is a case to be made for as few dependencies as possible.

- Not desired state – Desired State Configuration (DSC) is not a new concept, yet it is still very hard to get right. In brief, the idea of DSC is to get a targets configuration matching exactly what is stored in source control: think of how security policy will wipe away users that exist in a server group that are not in a list. But a key point of DSC is for scripts to be idempotent: irrespective of how many times they run, they achieve the same result. An easy way to do this is to blow everything away and start afresh every time, but this is a bit brute force and can cause problems; in the case of SSIS deployments,you’d lose history. It also means that deployments are probably going to be longer over time (admittedly not in the case of SSIS Deployments as the longest time to deploy anything is the ispac.)

- Catalog Configuration – by this I mean the name of the folder/environment, the environment variables and how they are mapped to parameters, be they project or parameter. I’ve not really seen a sample that tackles this in a satisfactory manner – they’re just PowerShell variables filled in in a script – how do they get there…. as in – how can anyone take what you’ve written and deploy with minimum changes, and creates a deployment artefact for auditing?

To sum up, I wanted a process that had as few dependencies as possible, is idempotent and provide a deployment artefact that can be stored alongside an ispac, so that we can package the thing and the whole of thing.

With that in mind, earlier this week (and 2 times subsequent from) we released and open sourced AssistDeploy, a PowerShell module that automates the deployment process of an Integration Services solution. I’m going to delve more into how it works, by addressing the three categories raised above.

Dependencies

The PowerShell functions make use of the T-SQL API by creating a connection to SSISDB on the target server via sqldataclient, checking the views for the current state and executing the public stored procedures to update the solution on the instance. SqlDataClient is available in .NET, so the only dependencies that the box needs to execute the PowerShell is

- PowerShell (obviously)

- .NET (which last time I checked seems quite popular)

- The ability to connect to the instance that includes SSISDB. This should be a given for any deployment pipeline.

This means that AssistDeploy will run on pretty much any Windows box built in the past 5 years with no pre-requisites. It should also run on Linux, I honestly don’t know I haven’t checked it, maybe that can be worked on in the future?

Desired State Configuration

The module deploys changes as described above -

- Checks if object exists

- If it does, make no changes

- If it doesn’t, create it

- Verify that object exists.

Pretty straightforward stuff. Check out PublishFolder as an example. Where this gets a little more tricky is environment variables –

- if they exist on the server but are no longer required, we delete them

- If they do not exist on the server but we need them to, we create them

- If they already exist, we need to check that they match what we need them to be

- If value has changed, check data type

- If data type has changed, then drop and re-create, as we cannot change either one of these on their own without risking breaking the attempted update

- if data type is same, update the value

- If protection level has changed, alter to be either sensitive or not – we also need to re-enter the value as if we change something from “sensitive” to “not sensitive” we then need to re-enter the value as value will be blank (or null)

- If description has changed, update it.

- If data type has changed, update it.

- If value hasn’t changed, do nothing.

If you want to see this in action, I refer you to PublishSSisVariables.ps1.

This is where it is clear to see that DSC is a difficult thing to achieve, but by making these checks we reduce the number of changes that are deployed at any one time: it would be far easier to blow them away and re-deploy everything all over again, but change is risk. So by minimising the number of changes we minimise the risk when deploying, even if it does get tricky to manage initially.

Catalog Configuration

OK, this is the big one (not this) – AssistDeploy requires the use of a json file that has been crated specifically to automate the deployment of those objects not in as ispac -

- Folder

- Environment

- Environment Variables

Additionally, the json file is used to map the value of a parameter to an environment variable. This is the template of the json -

The json file should be part of your ssis project. As in, add it to your project and make sure it is included in your nuget package along with your ispac (more on this later).

The json has a section that declares the folder/project/environment/environment description which is used throughout the deployment process. Any parameter, either package or project, will reference an environment variable. So all parameters have parents that are an environment variable. Whether a parameter is a package or project only matters in one sense: a package parameter will have to include a "objectName" string with the value set to the package it exists in. The "value" of each parameter will be the "variableName".

If Values Are Hard-Coded in the json File, How Can I Deploy to Other Environments?

The json is passed to the functions as a PSCustomObject, which is created by the Import-Json function. This function also tests the json file is valid. For json objects under "Integration Services Catalog", the overwrite of the values is quite simple. So if we wish to alter the folder/environment/environment description, there are optional parameters that override what is in the json file. Consult the documentation headers in the functions for more info/examples (e.g. see how PublishSsisFolder calls SetIscProperty if ssisFolderName is present.)

For the values of variables under ssisEnvironmentVariables, the process is only slightly more complex. It is only the value of "value" that will change; this is where the actual value of both environment and parameters is stored. All other values should remain constant. In "Publish-SsisVariables" there is a switch called -localVariables. If this is included when calling the function then the values within the json file will be used when publishing variables to the Integration Services Catalog. However if this switch is not included then a PowerShell variable with the exact same name as the variableName must exist in the same session, and the value of this Powershell parameter overwrites the value in the json file in memory.

To see this in action, I refer you to lines 65-81 in PublishSSisVariables.ps1.

This json File Seems Like A Lot Of Effort To Create...

In deference to the fact that the json file can be quite difficult to create for larger and more complicated SSIS projects, there is a function that will import a project currently on an Integration Services Catalog into the json format required by the module. The idea here is that a developer is working on a local instance, deploying the ispac and creating environment variables for parameters. After they have completed their work they can extract the json and ddeploy locally to verify that hte changes are correct. This means that the json should not have to be written by hand, ever.

A Working Example

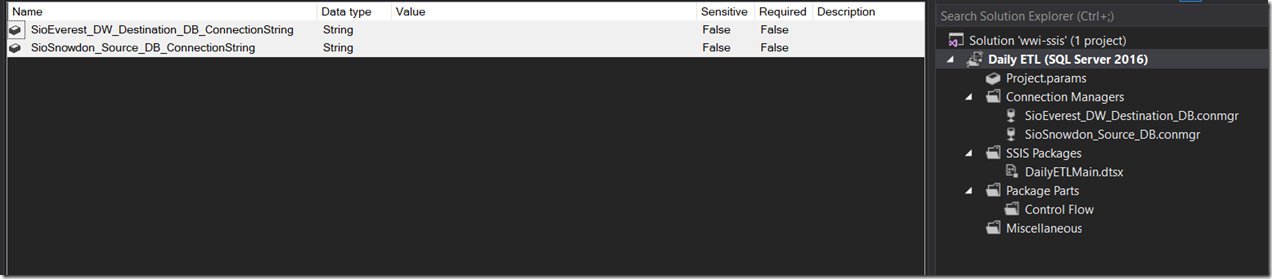

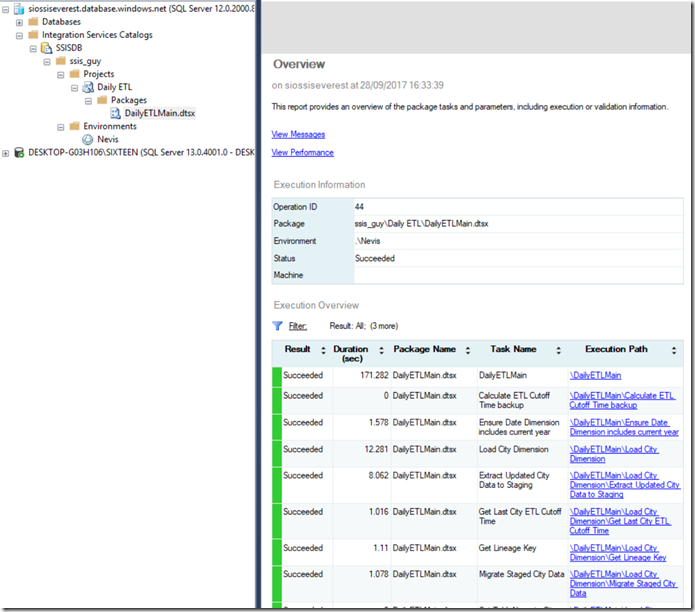

All this is not much help without some sort of demo. So let’s go through an example, which makes use of the latest SSIS Package Execution in Azure. I have here two databases: an OLTP database and a Data Warehouse, and I wish to extract data out of the OLTP database into the Data Warehouse. All this is pretty immaterial though; what I have to deploy is one dtsx package with two project parameters for connections. In the interest of full disclosure, this is the wwi-ssis available on sql-server-samples.

I have the project deployed to my local instance, and so I need to extract the info out of my local SSISDB and output as json.

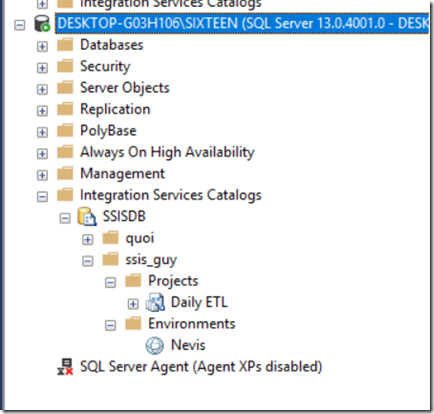

Let’s be clear on a few things:

- the folder is “ssis_guy”

- the project is “Daily ETL”

- the environment is called “Nevis”

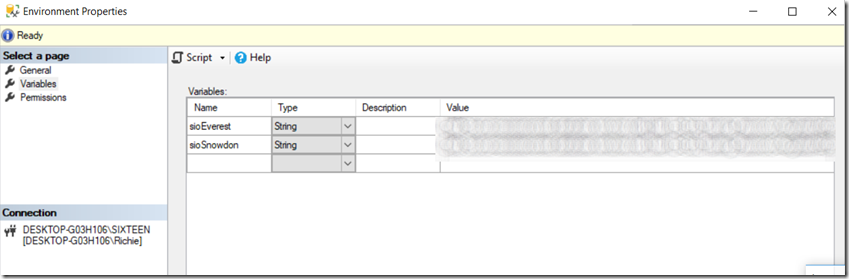

The environment has 2 variables: sioSnowdon and sioEverest. I’ve redacted their values -

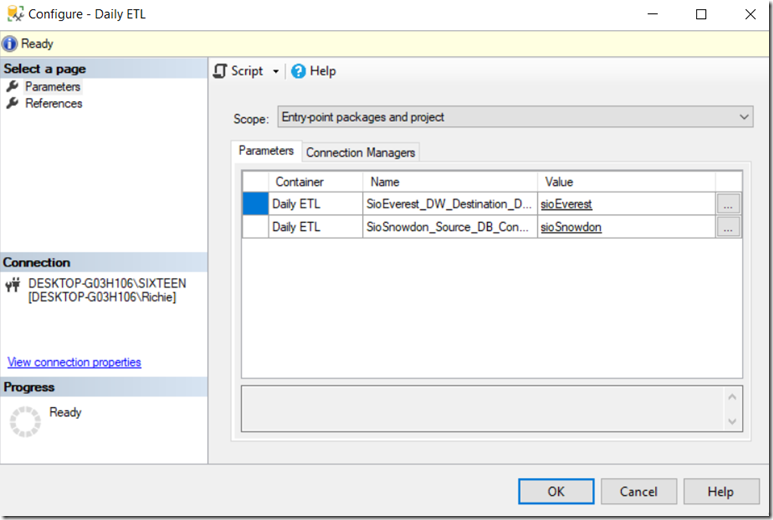

And these are used by the project params -

Ok, let’s extract what we need out of my dev environment. I need to

- import the module

- create the connection string

- create a connection to SSISDB

- set the variables for folder/environment/project/environment description

- set a variable as the output of “Get-SsisAsJson”

- save contents of that to a file

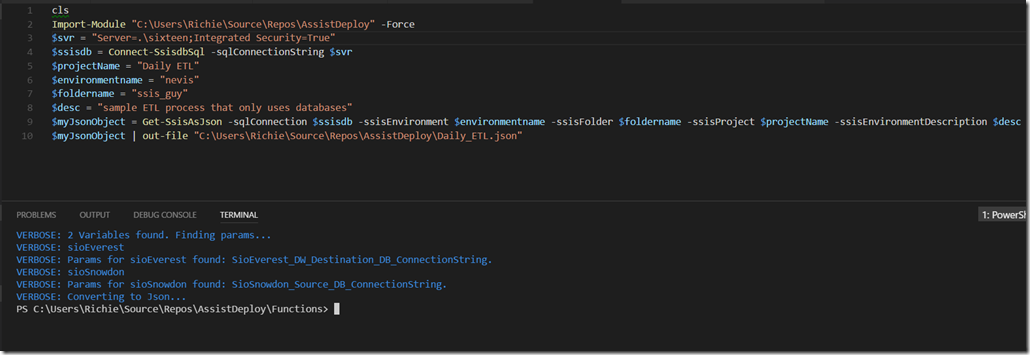

This is the code -

The output shows that two variables were found, as well as the corresponding parameters.

So the json file looks like this, as expected – we have two project parameters, and for each one of those we have an environment variable.

The PowerShell takes care of creating the environment reference between the environment variable and the parameter in SetObjectParameterValue. Note that an environment variable can have multiple parameters… this is an edge case, but say you have copied dtsx packages and added them to your solution, and have package parameters instead of project parameters. If you do have this then it’s horrible, but AssistDeploy will take care of that for you.

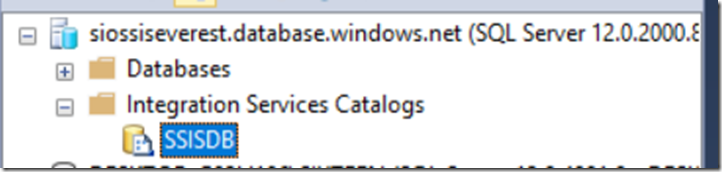

Now all we have to do is run the deployment; I’ll wipe everything off my SQL Azure instance that is hosting SSISDB for Azure Package Execution to get a fresh deploy:

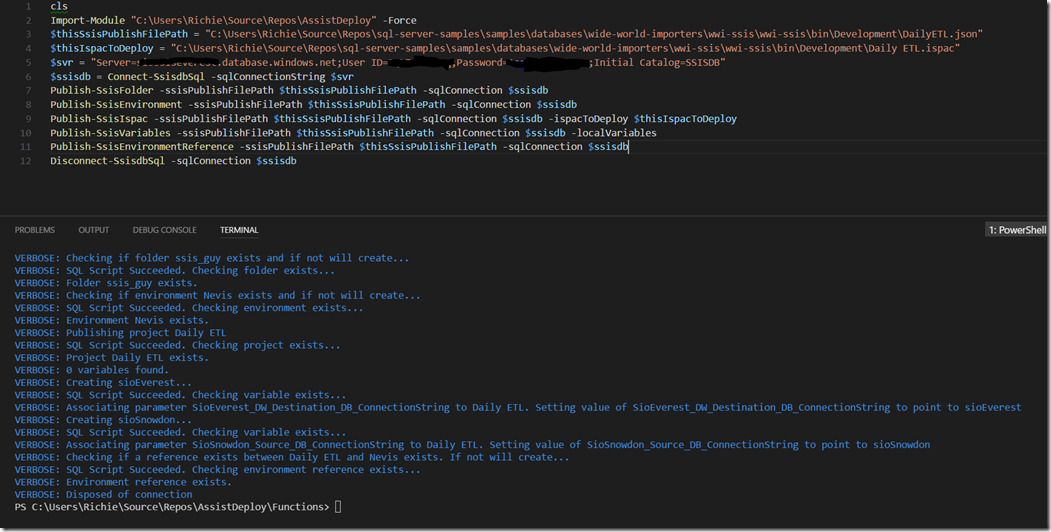

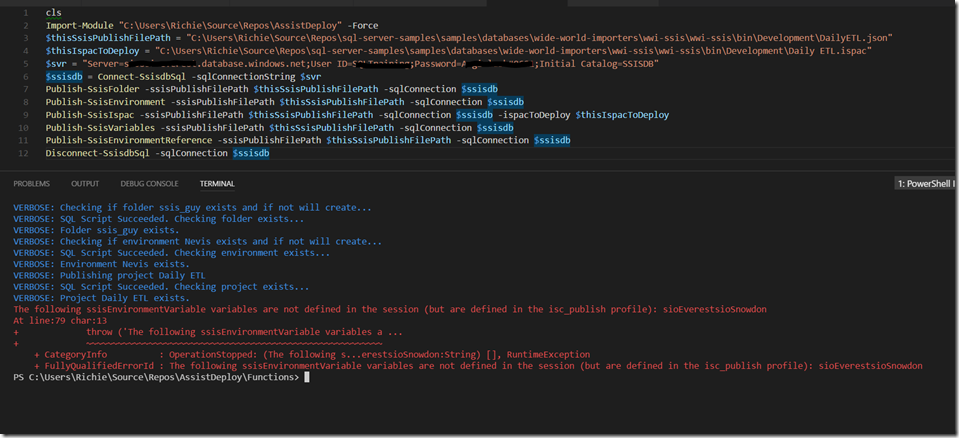

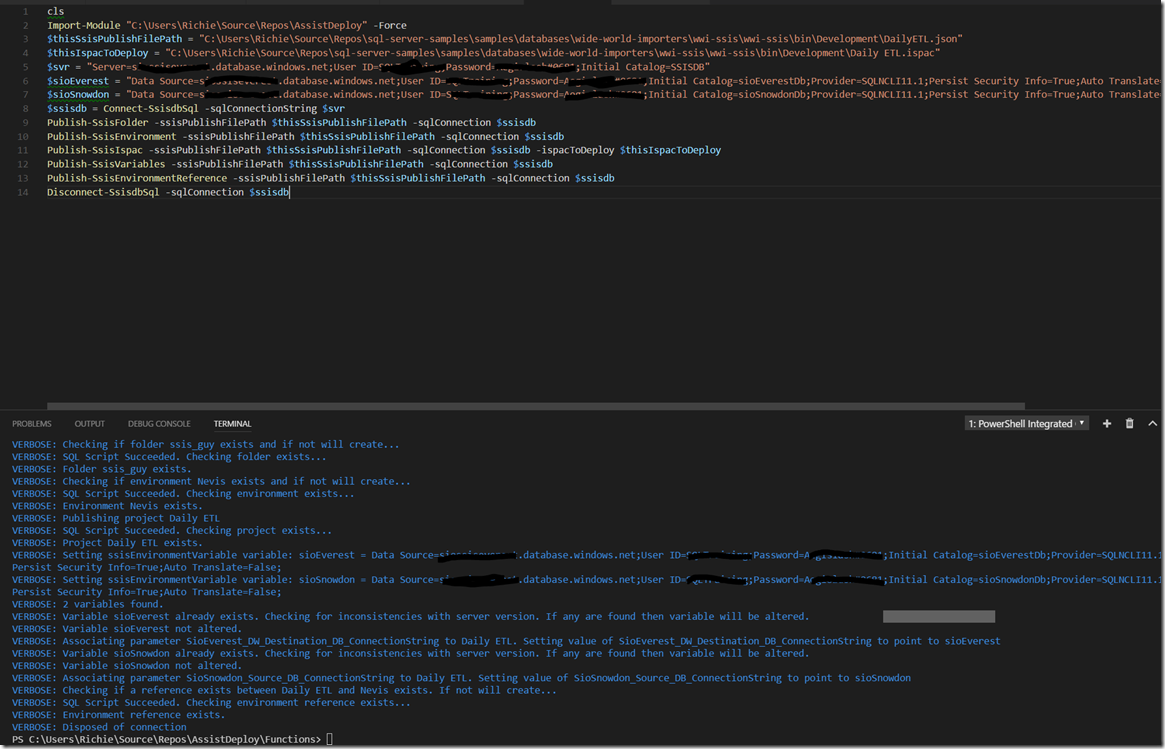

Let’s run a deployment (edit – since this post was published the functions have changed a little, so the screenshots are out of date. The gists are up-to-date)

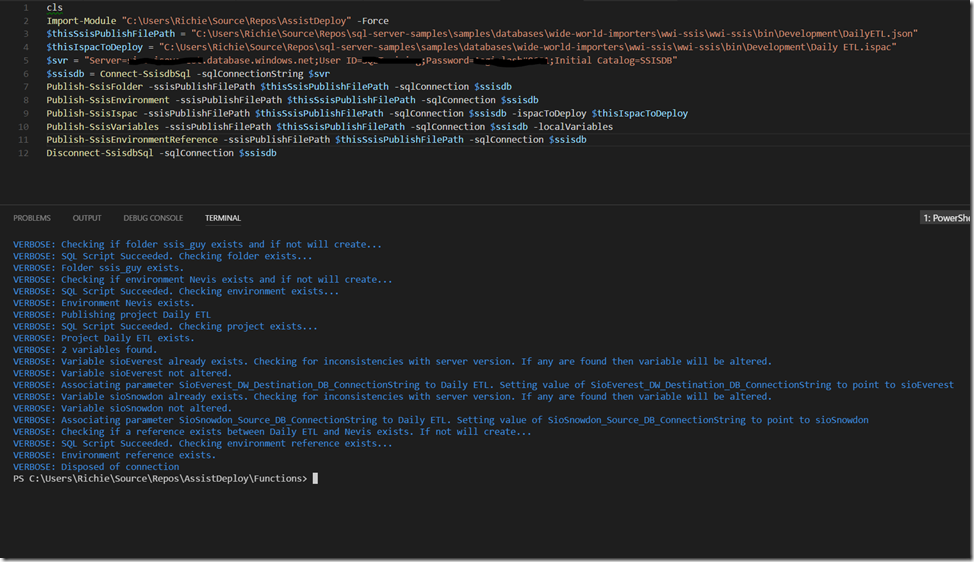

Looking through the logs you can see that it ran through the process, crating each object, including the variables. Let’s re-run and see what outputs - (edit – since this post was published the functions have changed a little, so the screenshots are out of date. The gists are up-to-date)

This time it verifies that the variables already exists and checks for inconsistencies. Say there was then there would be a change, but not in this case. The project now exists on the server. And we can run it to verify that all is present and correct.

What I like about the PowerShell module is that it can be run on a developers box before checking in, and the same module can be used in a deployment process. So it’s easier to check if a deployment should fail before checking in . This is crucial to reducing the number of failed deployments, and gives the developers a quicker turnaround to finding any issues in the process.

Code is below -

It’s important to note that when I am importing the json and publishing the variables I am using a flag called “localVariables”. What this does is take the values stored in the json file to be the ones used when publishing the environment variables. This further aids developers in reducing the amount of effort it takes to test the deploy locally. And to give you an example of how localVariables work, if I took it out on the script above and ran it again, I should expect to get an error: (edit – since this post was published the functions have changed a little, so the screenshots are out of date. The gists are up-to-date)

The module is telling me that I am missing a variable in the PowerShell session. So this blocks me from accidentally deploying a variable stored in the json to another environment. So if I create the variables I need, the deployment should work. (edit – since this post was published the functions have changed a little, so the screenshots are out of date. The gists are up-to-date)

Where Should I Put the Json File?

As the json file is a deployment artefact it should be included as part of the project: create/modify it before you check in any changes, and include it in your nuspec file along with your ispac. That way the corresponding artefacts can be passed around together through your deployment pipeline.

What’s Missing?

Things that are missing and will be added security features, such as crating roles, setting permissions on roles for folder access etc.

Things that probably won’t be included will be creating the SSIS Catalog: if you look at SSIS in Azure for instance, the SSIS Catalog is created via Azure PowerShell. If you look at on-prem, there is a password to create a key. Both of these are outside the scope of connecting to SSISDB, for obvious reasons. If you want these to be automated, you’re best bet is to look at how you automate your infrastructure deployments rather than at this level.

Summary

This module has taken quite some time to get to this state, and it’s at a place where teams can start using to further automate their deployment process. There are still a few missing features that need to be added, but when you look back on the options presented earlier between manually deploying everything but the isapc, and sucking it up and automating deployment, I feel confident in saying that we are a lot closer to a fully automated process than a manual process.

I’m very keen for others to start using and making suggestions, so by all means download the repo and create pull requests!

[1] But you know that when someone say that there’s good news there is the diametrically opposed bad news. Wait for it…)