SSDTPokedex: Migrating a Database Into SSDT

Hello!

If you want to have the best chance of something being successful, you have to be committed to it from the start. That’s a pretty fatuous sounding statement, almost as bad as “to make something better you have to do more of the good stuff and less of the bad stuff”, so let me contextualise: if you desire to have good testing coverage on an application, then you need to be serious about testing form the first day you write code for the application. Be it manual or automated testing, you need to put the effort in terms of time and money to achieve test coverage. There’s no point in waiting six months then bemoaning the quality and then start writing tests, because you will always be playing catch-up.

The same argument can also be said for build/deployment: if you get tired of manual deployments that never work and are never the same in any two environments, then you have to be serious about where your source code lives (if indeed you have even committed to using a VCS) and how you deploy right from the beginning. A relatively simple application can grow from a web server/database backend into using SSIS, SSRS or even general Azure goodness. And the more moving parts the more complex a deployment is.

And so obviously the more you put off putting any sort of DevOps practice into action, the more effort you have to put in later, or rather, the more Technical Debt you put in the backlog. And database source control build/deploy etc. is hard. But I posit that it is possible to take an old database that doesn’t even run on SQL Server and manage to migrate it over into an SSDT project and get it compiling successfully relatively quickly. Note that this is not a perfect solution, because there is a great deal more refactoring required to make the database decent rather than “just get it compiling”, but it is something to get started with; an attempt at establishing gold code/a single point of truth.

So the sample database I am going to use is one that has been around for many years. Actually it’s not even a sample database, it’s one that is the basis for many applications/websites out there. It’s called the Pokedex, and contains all the data used for the Pokémon games released on Nintendo’s handheld devices. As I said the database has been around for some time, 8 years in fact, and over that time there have been considerable changes to the games, and so the database has evolved to accommodate these changes. There were design decisions made years ago that couldn’t anticipate changes in later generations. And because this database has been migrated form another database engine, there are features that are different: for example by default all indexes in SQLite are non-clustered, except for those that had the table created without the UNIQUEROW (or something like that), so there’s going to be discrepancies that we’ll have to repair. And so this database is exactly what you would expect to find in the real world, and the challenges faced with getting a database that’s been in the wild in source control. And I haven’t even mentioned the static data that needs to be loaded somehow!

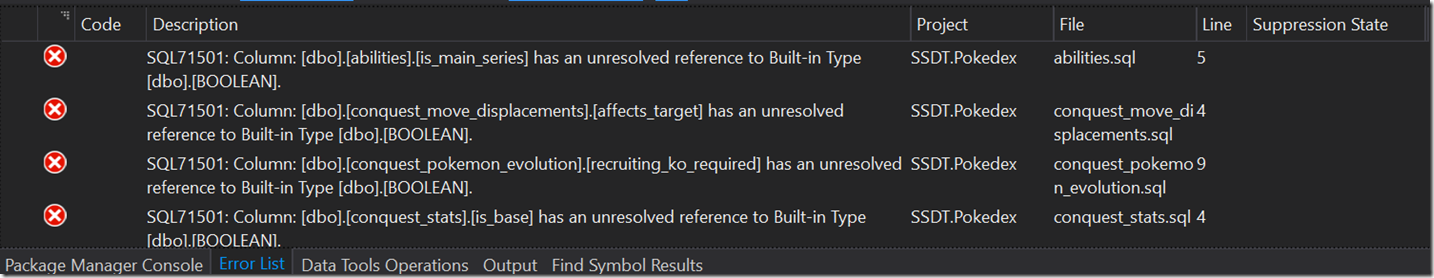

The Pokédex is available on GitHub right now and is available for three different database engines: PostgreSQL, SQLite and MySQL. Crucially, there is no SQL Server version. And so with a bit of effort I followed the Getting Data wiki article and managed to load the data into a SQLite database. This was not the box of fluffy ducks the wiki site makes it out to be as I was using a VM and so had to install all the pre-requisites, load paths via SETX and other general goodness before I was able to extract the schema and the data out of the SQLite version, load the schema into an SSDT project and publish the project to GitHub. Other than the setup and extracting the data, importing a database into SSDT is really quite trivial, it’s the refactoring to get it into a decent state that takes the effort! For example, the first release I’ve tagged does not compile. And this is because some of the columns are declared as the BOOLEAN data type, which is non existent in SQL Server, but is a valid data type in SQLite.

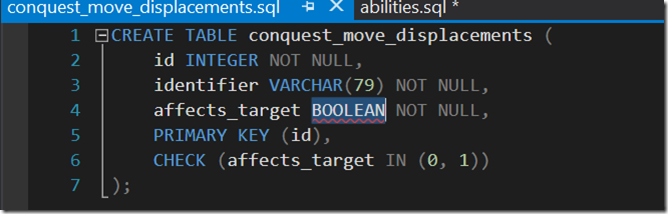

So let’s get th3ese changed to BIT and try again. By double tapping on the error in the Error List I can go straight to the declaration and alter the code.

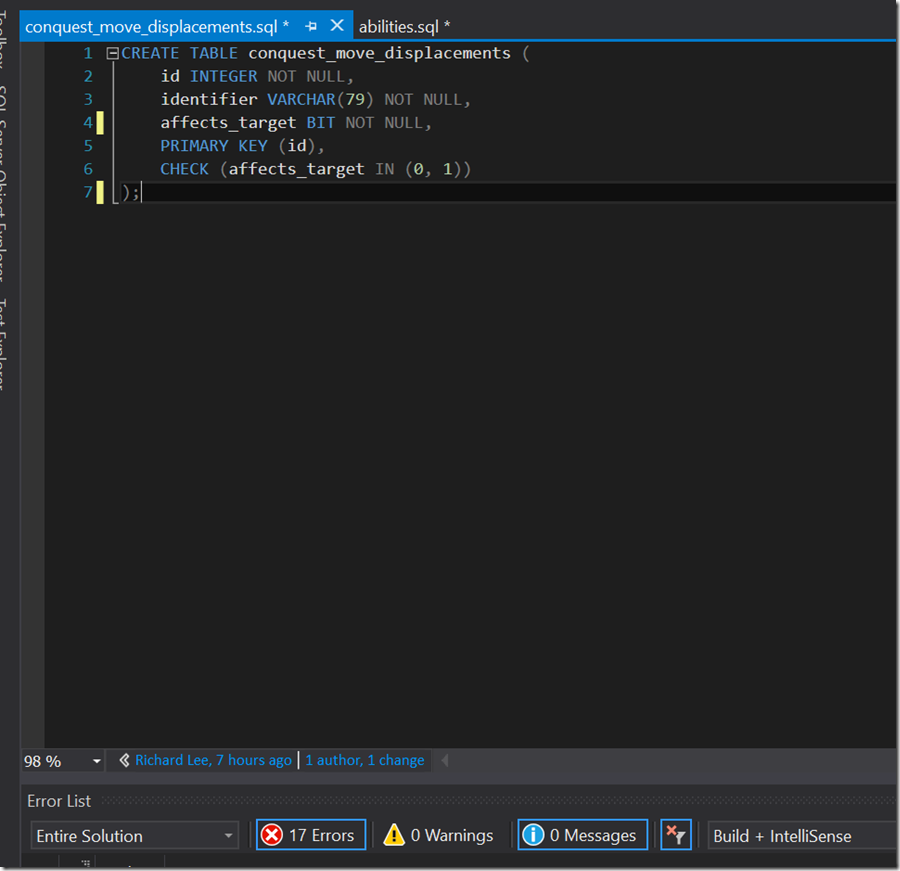

Alter to a BIT, and just like that, the error disappears from the Error List. Only 17 more to go…

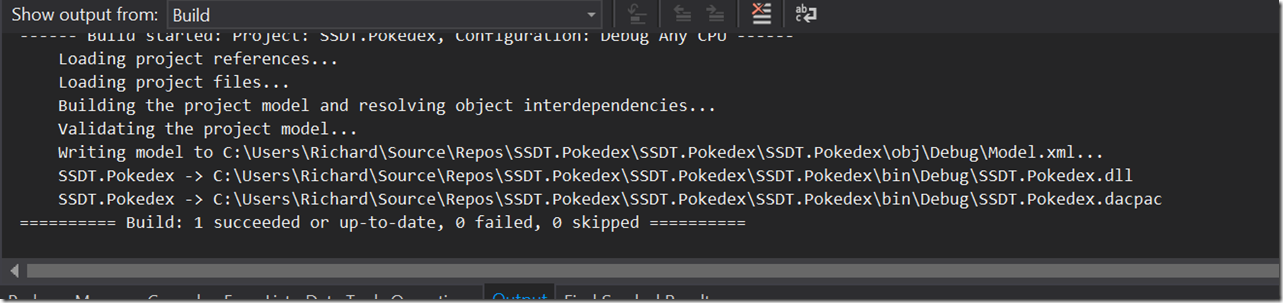

Now I’ve cleared all the errors I build again and boom! A successful build with a DACPAC to show for my efforts. Now just to check in and we now have a working release (1.0.0)

Over the coming weeks I’ll be making changes to the project in GitHub and posting my progress here, some of the challenges I faced, how SSDT worked in unexpected ways etc. This is because though we have a DACPAC, we still have many issues to contend with: there’s no names for either Primary or Foreign Keys, schemas are non-existent, reserved keywords in SQL Server are used as column names, there’s no proper way to get the static data loaded, and the data itself only goes up to Gen 6 (meaning Get 7/Sun/Moon data is not included.) And I’ve not even gota deployment pipeline/environments/tests setup! But it should make for an interesting case study.