SSDT 16.5 Released

Hello!

Recently the SQL Tools Team released a new version of both SQL Server Data Tools (SSDT) and SQL Server Management Studio (SSMS.) There’s a range of bug fixes, but two new features that I am particualrly interested in.

Firstly, SQLPAckage.exe and the DacFx API can now generate deployment report, deployment script and publish to a datbase all in one action. Neat! This is useful because it’s important to keep track of exactly what has changed on a database. Of course there’s nothing stopping you right now from creating executin these options in three separate actions, but there are a few issues with this approach:

- Actually writing these operations out for either an ad-hoc process or even for an automated process is time consuming and esepcially tedious for an ad-hoc/casual script.

- In the time taken to generate a report, generate script and execute the the deployment, something may have changed in the target database. Admittedly this is not very likely if these operations happen right after one another, but if there a pause for any reason then there’s achance that the deployment report or script may be different from what is actually deployed. This can cause problems.

So by executing the three actions all in one step, we can fully audit what changes were actually made with confidence. To get either the script or the report generated, there are two new optional parameters parameters:

- /DeployReportPath

- /DeployScriptPath

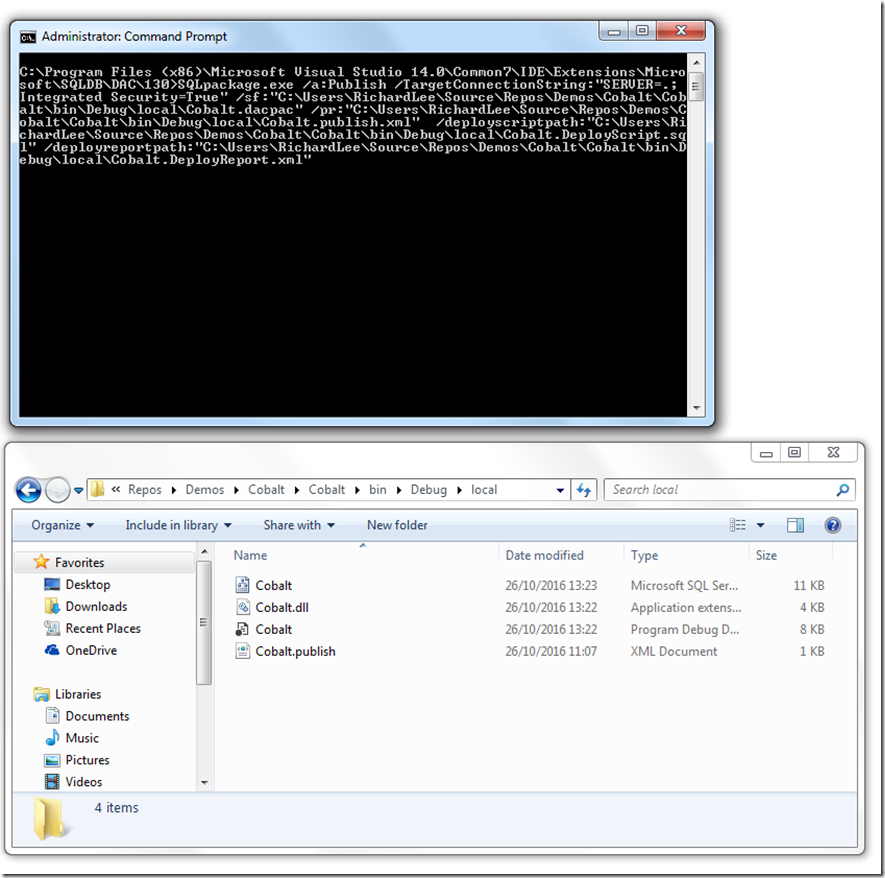

let’s see both these in action for a local deployment. The commandline I needed to run is below:

SQLpackage.exe /a:Publish /TargetConnectionString:"SERVER=.; Integrated Security=True" /sf:"C:\Users\RichardLee\Source\Repos\Demos\Cobalt\Cobalt\bin\Debug\local\Cobalt.dacpac" /pr:"C:\Users\RichardLee\Source\Repos\Demos\Cobalt\Cobalt\bin\Debug\local\Cobalt.publish.xml" /deployscriptpath:"C:\Users\RichardLee\Source\Repos\Demos\Cobalt\Cobalt\bin\Debug\local\Cobalt.DeployScript.sql" /deployreportpath:"C:\Users\RichardLee\Source\Repos\Demos\Cobalt\Cobalt\bin\Debug\local\Cobalt.DeployReport.xml"

In the folder at the moment all I have is the build output from a Visual Studio build.

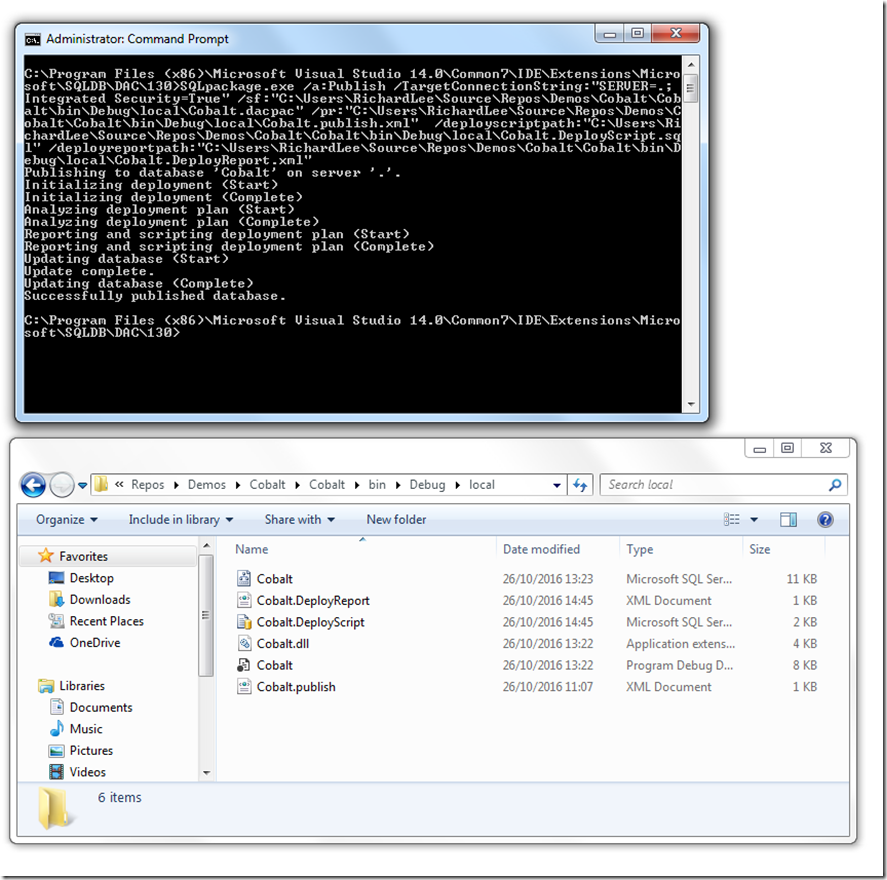

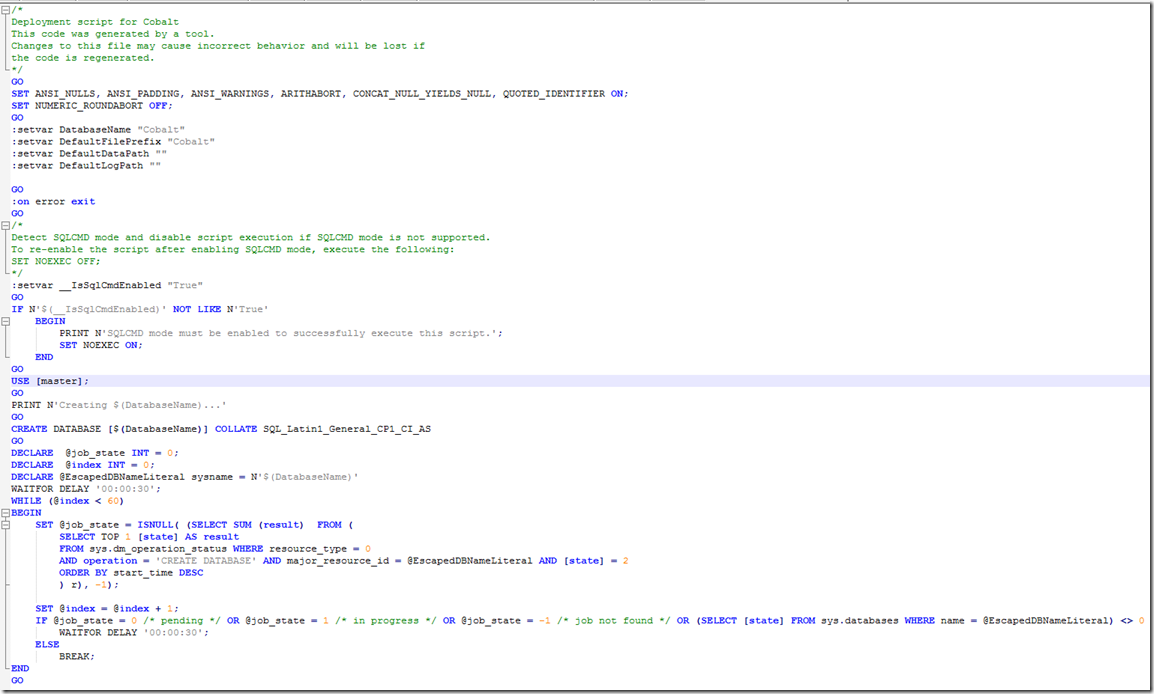

Now after I have executed the command I now have my two artefacts: a script file and a deploy report. And by digging into the script and report I can see what was deployed and verify against the server if I want to. Tidy!

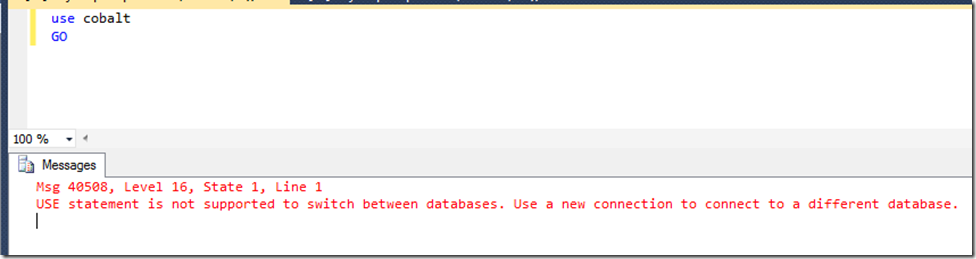

The neat thing about these options is that they also work for Azure deployments. After all, why wouldn’t they? But, and this is the second feature I want to talk about, when I create the script and publish to Azure, the SQL Tools Team have implemented have implemented a script to be created/published against both the master database and the user database. The reason for this is when you create connection to Azure, you cannot change the database you initially connected to, and there are plenty of things you need to do in master to support an Azure database, like creating the thing for a start! Clearly from an SSDT point of view this always presented an issue in Azure in that we wanted to connect to the master databaase, create the use database then switch connection. So now when you are deploying to Azure SSDT will now create two separte files:

- The user database script

- The master database script

There are of course alternatives to creating the database instead of using SSDT: You could havea pre-model script to create the database, or you could use a Deployment Contributor, or in the Respource Manager template for the server you could have the database created. But all this implies “magic”: that is, something outside of the databaase which requires extra effort to deploy. And the more moving parts the more likely something is to break. Additionally, consuming a dacpac as a fully integrated database becomes that much harder to achieve if extra effort is required to deploy hte database.

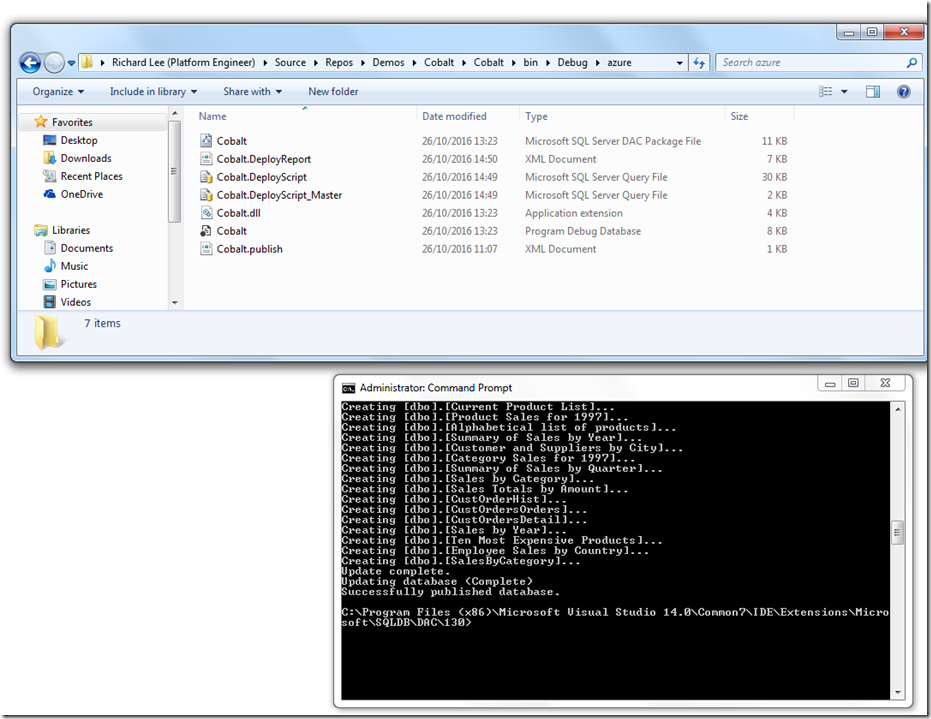

And execute them against the SQL Azure server. And what I really like about this is that no additional effort is required form our part! Below I have executed the exact same command as above, save for the connection string to point to a SQL Azure instance. And you can see, there is a script suffixed with “master”.

And if we open that up all that is in the script is the tasks that must be deployed against the master database:

So now we have deployments to azure working that much more smoothly.