Improving Azure Functions throughput

I recently ran into an Azure Functions throughput problem which I logged on Stack Overflow as regular-throughput-troughs-in-azure-functions-requests-per-second. The product group were pretty quick to respond and pointed me to their Azure App Service Team Blog post processing-100000-events-per-second-on-azure-functions/. The post lists five notable configuration choices:

- functions process [event hubs] messages in batches

- webJobs dashboard is disabled in favor of using Application Insights for monitoring and telemetry

- each event hub is configured with 100 partitions

- data is sent to the event hubs without partition keys

- events are serialized using protocol buffers

Of these, the second and third are most interesting, because my function app is designed to perform a discrete action per event rather than consume a batch of events. Partition key configuration is not applicable, since I use Azure Function bindings to manage my Event Hubs interaction and therefore get it for free. As for protocol buffers, I simply do not need that sort of performance.

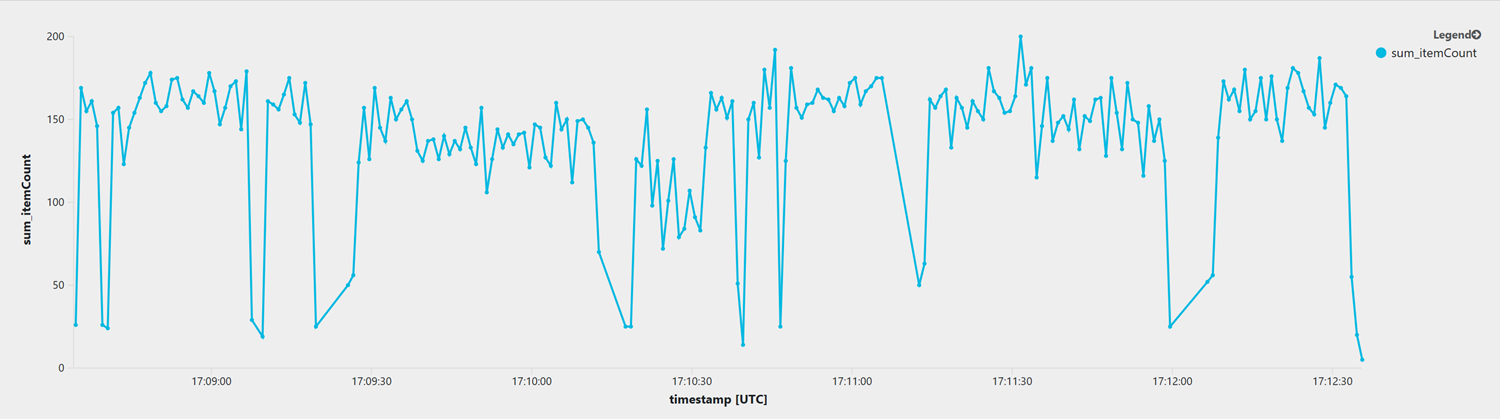

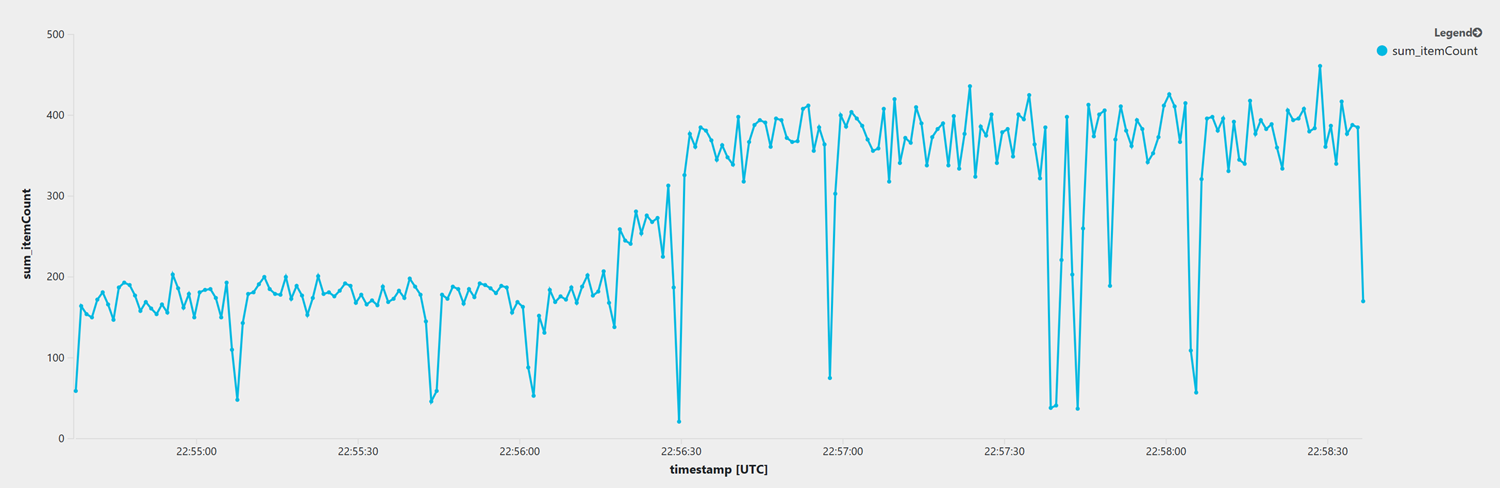

The baseline throughput

The image below shows the baseline throughput (requests/sec) that I want to improve upon.

While the throughput is more than adequate, the frequent troughs piqued my interest. Let’s see what can be done.

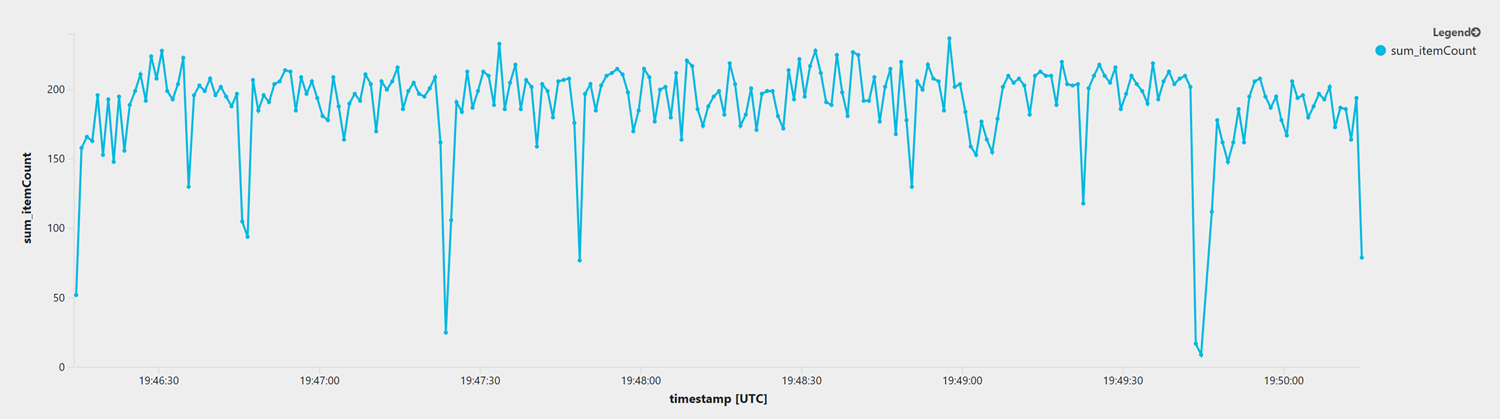

Azure webjobs dashboard

By default an Azure Function is configured to emit to the Azure webJobs dashboard via the AzureWebJobsDashboard application setting. The setting can be disabled by deleting it entirely, by clearing its value, or by renaming it, for example to AzureWebJobsDashboard_disabled, which is the option I chose. Once the application settings have been saved, the function app should be restarted for the change to take effect.

Do not disable the AzureWebJobsStorage setting. It is required for all but HTTP-triggered functions, and without it the function app fails to start with the error:

The following 1 functions are in error: Run: Microsoft.Azure.WebJobs.Host: Error indexing method 'ThroughputTest.Run'. Microsoft.WindowsAzure.Storage: Value cannot be null. Parameter name: connectionString.

The image below shows the throughput (requests/sec) for a comparative workload with the Azure webjobs dashboard disabled. A clear improvement.

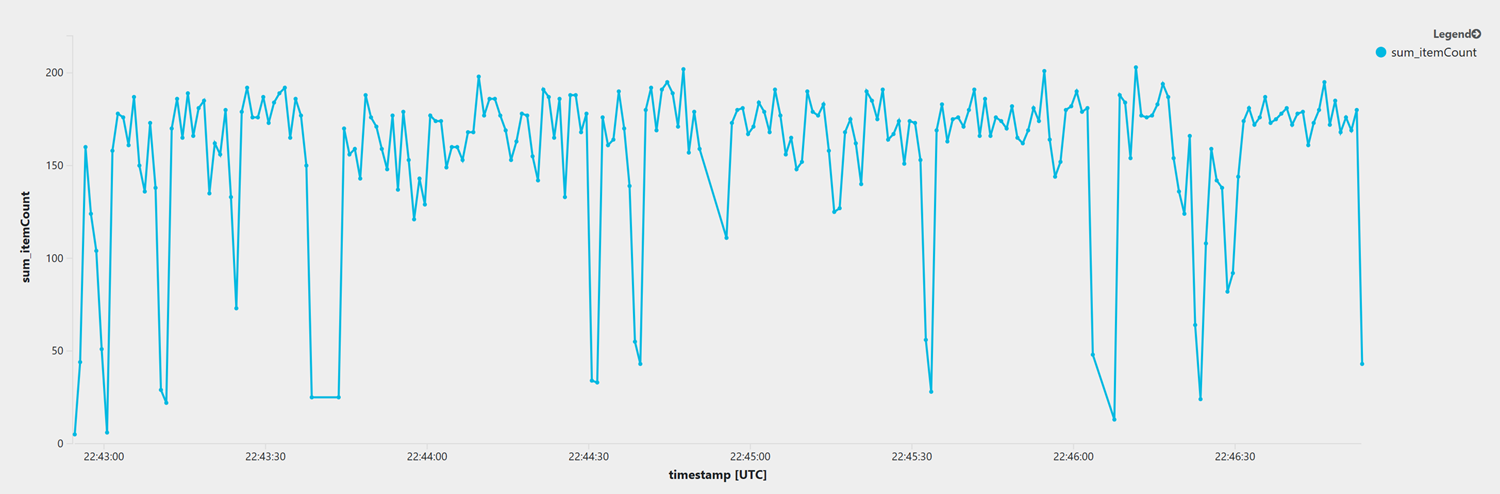

Event hub partitions

It turns out that the number of event hub partitions directly influences an Azure function’s ability to scale out. If, for example, an event hub has two partitions, then only two VMs can process messages at any given time, i.e. the partition count puts an upper limit on function scalability.
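To make the cap concrete, here is a toy Python model. It is not Azure's scale controller, only a sketch that assumes each partition is read by at most one instance at a time. The function names and the round-robin assignment are hypothetical, chosen purely for illustration.

```python
def assign_partitions(partition_count: int, instance_count: int) -> dict:
    """Spread partitions round-robin over instances (illustrative only).

    Assumption: every partition has exactly one owning instance.
    """
    owners = {i: [] for i in range(instance_count)}
    for partition in range(partition_count):
        owners[partition % instance_count].append(partition)
    return owners


def active_instances(partition_count: int, instance_count: int) -> int:
    """Count the instances that own at least one partition."""
    owners = assign_partitions(partition_count, instance_count)
    return sum(1 for partitions in owners.values() if partitions)


if __name__ == "__main__":
    # With 2 partitions, extra instances have nothing to read from.
    print(active_instances(partition_count=2, instance_count=5))
    # Raising the partition count to 4 lifts the ceiling accordingly.
    print(active_instances(partition_count=4, instance_count=5))
```

In this model, adding instances beyond the partition count does nothing, which is why raising the partition count is the lever that matters.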

The image below shows the throughput (requests/sec) for a comparative workload where

- Event Hubs partition count increased to 4 from 2

- Azure webjobs dashboard re-enabled.

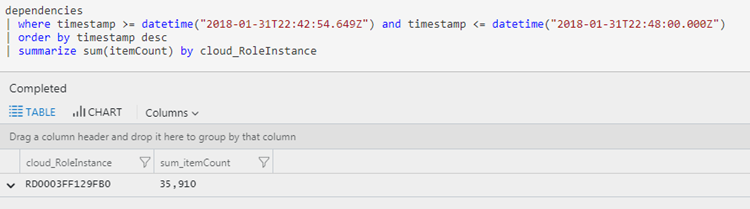

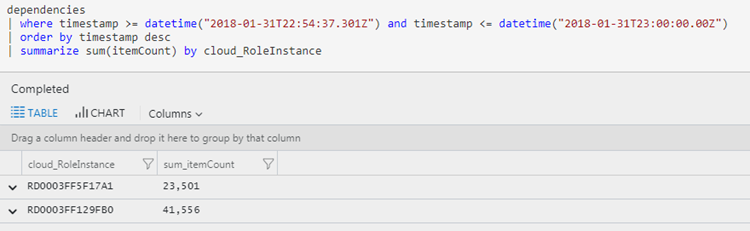

This result is pretty much as per the baseline, which was not expected. The number of VMs can be confirmed by the following App Insights Analytics query.

I repeated the above for a variety of event hub configurations and workloads, i.e. 2x, 5x, 10x, to no avail. The Product Group confirmed that scale out is automatic and dependent on workload, and that no event hub configuration other than the partition count was required. I went back to my code and indeed found a stateful bug where stateless is the goal!

I re-ran my comparative workload with Event Hubs partition count at 4 and the Azure webjobs dashboard disabled.

With the Azure webjobs dashboard disabled, the throughput was sufficient to cause the function to scale out, and it nearly doubled. We can see this via the analytics query shown below.

In Summary

The advice given by the Azure Function team in their post processing-100000-events-per-second-on-azure-functions/ is easy to implement and clearly gives an uplift in throughput. I certainly prefer App Insights over the webJobs dashboard, so that is a no-brainer. By the same token, it is easy to flood App Insights with messages, and this will be a problem if you are running the free version, have a low telemetry cap, or have not configured which telemetry to send; see my previous post managing-azure-functions-logging-to-application-insights/.

I admit to being a newbie at Azure Functions, and really the obstacle to scaling was that I had not written the function suitably in the first instance. My mistake was implementing state, which simply will not work. New VMs are allocated as the function scales, and within a function app the VM serving each function can be different. Sure, we can implement state in a database, but I think that misses the point.
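The state problem can be illustrated with a small Python sketch. It is not my actual function code: FunctionInstance, run and process are hypothetical names, and the round-robin routing is only an assumption that stands in for however events really reach each VM.

```python
from itertools import cycle


class FunctionInstance:
    """Stands in for one VM hosting the function (hypothetical)."""

    def __init__(self):
        self.seen = 0  # in-memory state, private to this instance

    def run(self, event):
        self.seen += 1  # the "stateful bug": counts only local events
        return self.seen


def process(events, instance_count):
    """Route events round-robin over instances and return each local count."""
    instances = [FunctionInstance() for _ in range(instance_count)]
    for event, instance in zip(events, cycle(instances)):
        instance.run(event)
    return [instance.seen for instance in instances]


if __name__ == "__main__":
    print(process(range(10), instance_count=1))  # one VM sees every event
    print(process(range(10), instance_count=4))  # each VM sees only a share
```

With a single instance the counter happens to be right, which is how a bug like this can hide until the function scales out.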